With a shared cache, an application instance simply sends a request to the cache service. The underlying infrastructure determines the location of the cached data in the cluster. You can easily scale the cache by adding more servers.

There are two main disadvantages of the shared caching approach:

- The cache is slower to access because it is no longer held locally to each application instance.
- The requirement to implement a separate cache service might add complexity to the solution.

The following sections describe in more detail the considerations for designing and using a cache.

# Decide when to cache data

Caching can dramatically improve performance, scalability, and availability. The more data that you have and the larger the number of users that need to access this data, the greater the benefits of caching become.

# Shared cache vs private cache software

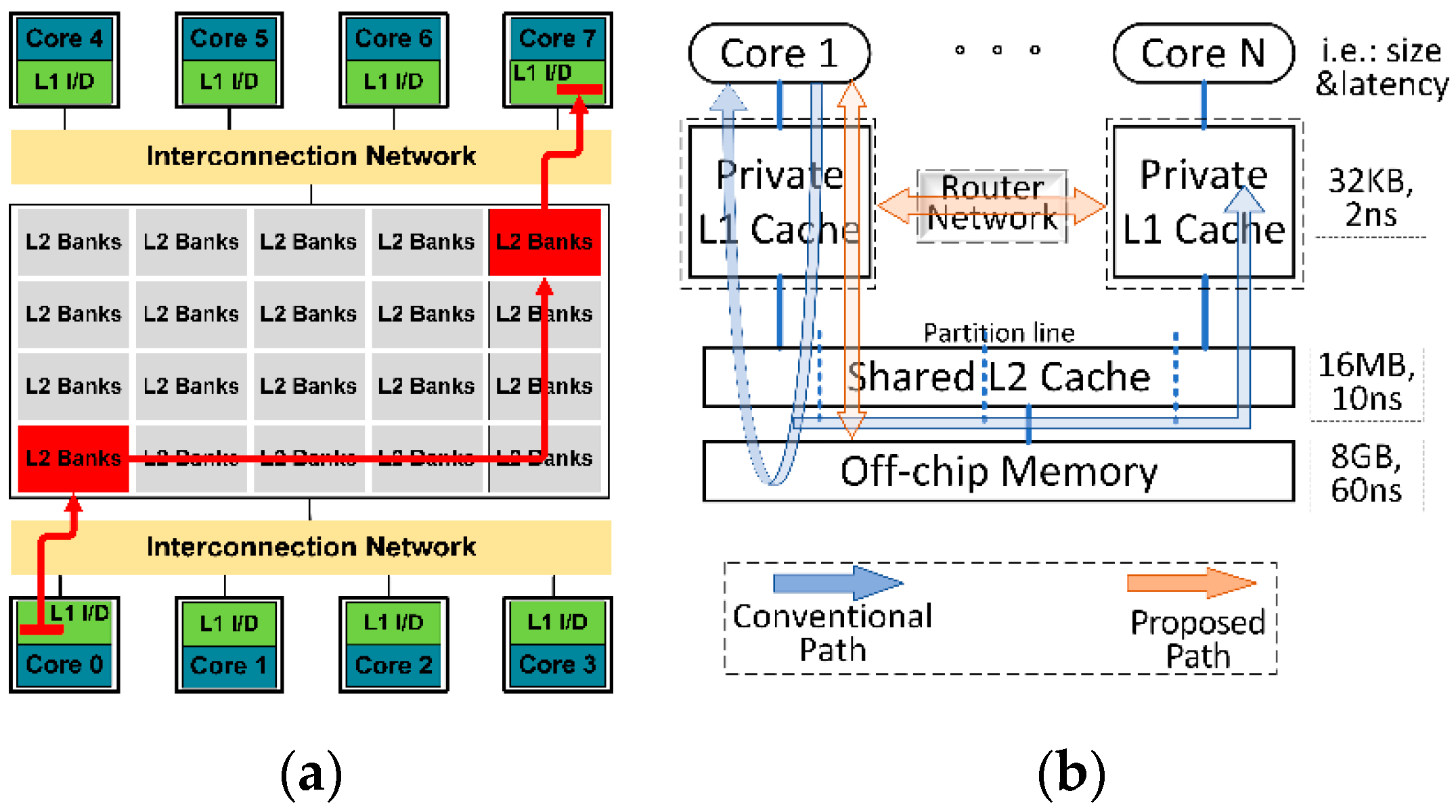

Figure 1: Using an in-memory cache in different instances of an application.

When each application instance keeps its own in-memory cache, the same query performed by these instances can return different results, as shown in Figure 1.

Using a shared cache can help alleviate concerns that data might differ in each cache, which can occur with in-memory caching. Shared caching ensures that different application instances see the same view of cached data. It does this by locating the cache in a separate location, typically hosted as part of a separate service, as shown in Figure 2.

An important benefit of the shared caching approach is the scalability it provides. Many shared cache services are implemented by using a cluster of servers and use software to distribute the data across the cluster transparently.
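To make the idea of a shared cache spread across a cluster more concrete, here is a minimal in-process sketch. Everything in it is an assumption made for illustration: `CacheNode` and `SharedCacheClient` are hypothetical names, the "servers" are plain Python objects rather than networked machines, and the hash-modulo placement is only one simple way a service might decide where a key lives. Real cache services may place data quite differently.

```python
import hashlib
from typing import Any, Dict, List, Optional


class CacheNode:
    """Hypothetical stand-in for one server in the cache cluster."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.entries: Dict[str, Any] = {}


class SharedCacheClient:
    """Hypothetical client: callers never choose a node themselves."""

    def __init__(self, nodes: List[CacheNode]) -> None:
        if not nodes:
            raise ValueError("the cluster needs at least one node")
        self._nodes = nodes

    def _node_for(self, key: str) -> CacheNode:
        # Assumed placement rule: hash the key, then take it modulo the node count.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._nodes)
        return self._nodes[index]

    def set(self, key: str, value: Any) -> None:
        self._node_for(key).entries[key] = value

    def get(self, key: str) -> Optional[Any]:
        return self._node_for(key).entries.get(key)


# Two application instances talk to the same cluster through their own clients.
cluster = [CacheNode("node-0"), CacheNode("node-1"), CacheNode("node-2")]
client_a = SharedCacheClient(cluster)
client_b = SharedCacheClient(cluster)

client_a.set("stock:widget", 7)
shared_value = client_b.get("stock:widget")  # read back by the other instance
```

Because both clients apply the same placement rule to the same cluster, a value written by one instance is visible to the other. A modulo rule like this one would remap many keys whenever a node is added, which is one reason production services tend to use more elaborate placement schemes.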

An in-memory cache can provide an effective means for storing modest amounts of static data, since the size of a cache is typically constrained by the amount of memory available on the machine hosting the process. If you need to cache more information than is physically possible in memory, you can write cached data to the local file system. This will be slower to access than data held in memory, but should still be faster and more reliable than retrieving data across a network.

If you have multiple instances of an application that uses this model running concurrently, each application instance has its own independent cache holding its own copy of the data. Think of a cache as a snapshot of the original data at some point in the past. If this data is not static, it is likely that different application instances hold different versions of the data in their caches.
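The way private caches drift apart can be shown with a small simulation. This is only a sketch under stated assumptions: `data_store` is a hypothetical dictionary standing in for the original data store, `AppInstance` is an invented class, and both "instances" run in one Python process purely for demonstration.

```python
from typing import Dict

# Hypothetical stand-in for the original data store.
data_store: Dict[str, int] = {"stock:widget": 10}


class AppInstance:
    """Hypothetical application instance with its own private cache."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._cache: Dict[str, int] = {}

    def read(self, key: str) -> int:
        # Copy the value from the store on first access, then serve the copy.
        if key not in self._cache:
            self._cache[key] = data_store[key]
        return self._cache[key]


instance_a = AppInstance("A")
instance_b = AppInstance("B")

instance_a.read("stock:widget")   # A takes its snapshot while the store holds 10
data_store["stock:widget"] = 7    # the original data changes afterwards

seen_by_a = instance_a.read("stock:widget")  # served from A's older snapshot
seen_by_b = instance_b.read("stock:widget")  # B's first read sees the new value
```

Here `seen_by_a` and `seen_by_b` differ even though both instances ran the same query, because each holds a snapshot taken at a different moment.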

# Shared cache vs private cache code

Caching is a common technique that aims to improve the performance and scalability of a system. It does this by temporarily copying frequently accessed data to fast storage that's located close to the application. If this fast data storage is located closer to the application than the original source, then caching can significantly improve response times for client applications by serving data more quickly.

Caching is most effective when a client instance repeatedly reads the same data, especially if all the following conditions apply to the original data store:

- It's slow compared to the speed of the cache.
- It's subject to a high level of contention.
- It's far away, so network latency can cause access to be slow.

Distributed applications typically implement either or both of the following strategies when caching data:

- Using a private cache, where data is held locally on the computer that's running an instance of an application or service.
- Using a shared cache, serving as a common source that can be accessed by multiple processes and machines.

In both cases, caching can be performed client-side and server-side. Client-side caching is done by the process that provides the user interface for a system, such as a web browser or desktop application. Server-side caching is done by the process that provides the business services that are running remotely.

The most basic type of cache is an in-memory store. It's held in the address space of a single process and accessed directly by the code that runs in that process.
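As a rough illustration of temporarily copying frequently read data into fast, process-local storage, the sketch below keeps values in a dictionary and calls a loader only when no fresh copy is available. The names `InMemoryCache`, `get_or_load`, and `slow_lookup` are hypothetical, and the fixed time-to-live used to expire entries is an assumption of this example, not something the text prescribes.

```python
import time
from typing import Any, Callable, Dict, List, Tuple


class InMemoryCache:
    """Hypothetical private cache living in the memory of a single process."""

    def __init__(
        self,
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._ttl = ttl_seconds  # assumed expiry policy: fixed time-to-live
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_load(self, key: str, load: Callable[[str], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh copy available: skip the original store
        value = load(key)    # missing or expired: go back to the original store
        self._entries[key] = (now + self._ttl, value)
        return value


calls: List[str] = []


def slow_lookup(key: str) -> str:
    """Hypothetical stand-in for a slow, remote, or contended data store."""
    calls.append(key)
    return f"value-for-{key}"


cache = InMemoryCache(ttl_seconds=60.0)
first = cache.get_or_load("customer:42", slow_lookup)
second = cache.get_or_load("customer:42", slow_lookup)  # served from memory
```

The second call never reaches `slow_lookup`, which is where the benefit comes from when the same data is read repeatedly. The `clock` parameter exists only so that expiry can be exercised without waiting.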
